Preference-based Interactive Multi-Document Summarisation

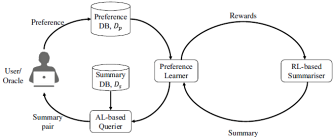

Abstract. Interactive NLP is a promising paradigm for closing the gap between automatic NLP systems and the human upper bound. Preference-based interactive learning has been applied successfully, but existing methods require several thousand interaction rounds even in simulations with perfect user feedback. In this paper, we study preference-based interactive summarisation. To reduce the number of interaction rounds, we propose the Active Preference-based ReInforcement Learning (APRIL) framework. APRIL uses Active Learning to select the queries posed to the user, Preference Learning to learn a summary ranking function from the user's preferences, and neural Reinforcement Learning to search efficiently for the (near-)optimal summary. Our results show that users can easily provide reliable preferences over summaries and that APRIL outperforms the state-of-the-art preference-based interactive method in both simulation and real-user experiments.
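To make the interplay of the three components concrete, the following Python sketch shows one possible query-learn-select loop. It is an illustration under simplifying assumptions, not the APRIL implementation: summaries are represented by hypothetical feature vectors, preferences are modelled with a linear Bradley-Terry model, queries are chosen by simple uncertainty sampling, and the neural Reinforcement Learning search is replaced by an argmax over a fixed candidate pool. All function names (`pick_query`, `update`, `april_sketch`) are invented for this example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def utility(weights, features):
    # Assumption: a summary's quality is a linear function of its features.
    return sum(w * f for w, f in zip(weights, features))


def pick_query(weights, candidates, asked):
    """Active learning step (uncertainty sampling, an assumption):
    return the unasked pair whose predicted preference is closest to 0.5."""
    best_pair, best_gap = None, float("inf")
    for i in range(len(candidates)):
        for j in range(i + 1, len(candidates)):
            if (i, j) in asked:
                continue
            diff = utility(weights, candidates[i]) - utility(weights, candidates[j])
            gap = abs(sigmoid(diff) - 0.5)
            if gap < best_gap:
                best_pair, best_gap = (i, j), gap
    return best_pair


def update(weights, preferred, rejected, lr=0.5):
    """Preference learning step: one gradient ascent step on the
    Bradley-Terry log-likelihood of 'preferred beats rejected'."""
    diff = [a - b for a, b in zip(preferred, rejected)]
    p = sigmoid(utility(weights, diff))
    return [w + lr * (1.0 - p) * d for w, d in zip(weights, diff)]


def april_sketch(candidates, prefers_first, rounds=10, lr=0.5):
    """Run the query-learn loop, then return the index of the best
    candidate under the learned ranking together with the weights."""
    weights = [0.0] * len(candidates[0])
    asked = set()
    for _ in range(rounds):
        pair = pick_query(weights, candidates, asked)
        if pair is None:  # every pair has already been queried
            break
        asked.add(pair)
        i, j = pair
        if prefers_first(candidates[i], candidates[j]):
            weights = update(weights, candidates[i], candidates[j], lr)
        else:
            weights = update(weights, candidates[j], candidates[i], lr)
    # Stand-in for the RL search: argmax over the fixed candidate pool.
    best = max(range(len(candidates)), key=lambda k: utility(weights, candidates[k]))
    return best, weights


if __name__ == "__main__":
    # Simulated user with perfect feedback and a hidden linear utility.
    hidden = [1.0, 0.0]
    pool = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    oracle = lambda a, b: utility(hidden, a) > utility(hidden, b)
    best, weights = april_sketch(pool, oracle, rounds=3)
    print("selected candidate:", best, "learned weights:", weights)
```

In this toy setting the pool is small enough to enumerate, so the final selection is exact. In a realistic setting the space of possible summaries is far too large to enumerate, which is why a search procedure such as Reinforcement Learning is needed for the last step.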